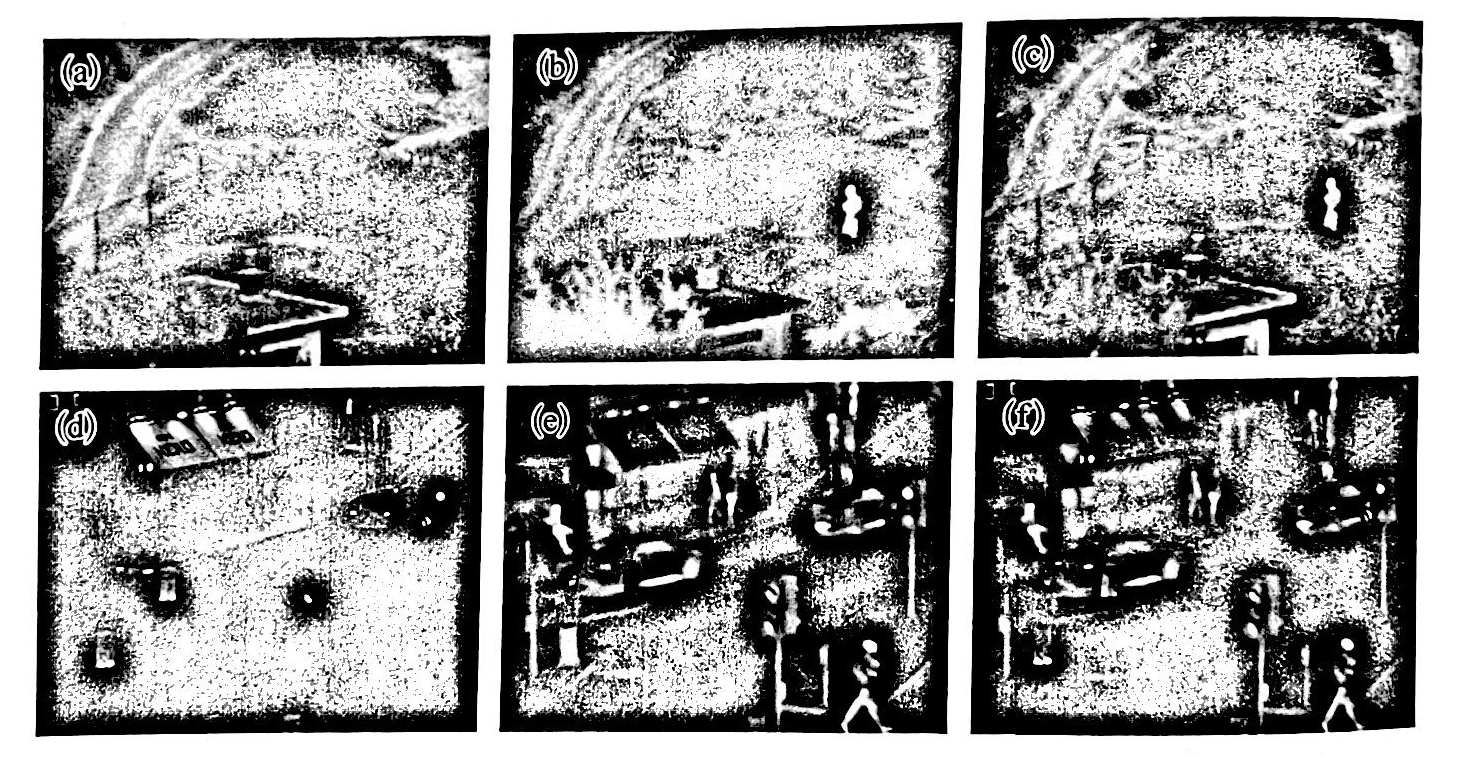

Image fusion combines two or more images into a single new image using a specific algorithm. When multi-source image sensors capture the same scene, either simultaneously or at different times, their images contain information that is partly redundant and partly complementary. By synthesizing this multi-source information, image fusion compensates for the limited geometric, spectral, and spatial resolution of any single image sensor. The result is a more accurate and comprehensive description of the scene, which extends the system's operating range and improves its reliability. As shown in the figure below, an image fused from a visible-light image and a thermal infrared image carries both types of information, which benefits further processing.

1) Levels of image fusion

Ordered by the level of information representation, from low to high, image fusion is performed at three levels: pixel-level fusion, feature-level fusion, and decision-level fusion. The general framework of an image fusion system covering all three levels is shown in the figure below.

Pixel-level fusion is the lowest level of image fusion. It combines the pixels of the source images directly, retaining as much scene information as possible, which benefits target observation and subsequent feature extraction. Pixel-level fusion requires strict registration between the source images, generally with pixel-level accuracy. Because every pixel of two or more images must be processed, the volume of data is often large and the computational load is relatively heavy.
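To make the pixel-level idea concrete, here is a minimal sketch of two simple fusion rules, weighted averaging and maximum selection, applied to already-registered grayscale images. These rules are chosen for illustration only and are not the only (or necessarily best) pixel-level methods; the function names, the list-of-rows image representation, and the sample pixel values are all hypothetical.

```python
from typing import List

# A grayscale image as a list of rows of intensities (e.g. 0-255).
Image = List[List[float]]


def _check_registered(a: Image, b: Image) -> None:
    """Pixel-level fusion assumes the sources are already registered
    (same size, pixel-aligned); here we can only check the size."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("source images must be registered to the same size")


def fuse_weighted_average(a: Image, b: Image, w: float = 0.5) -> Image:
    """Each output pixel is w * a + (1 - w) * b."""
    _check_registered(a, b)
    return [[w * pa + (1.0 - w) * pb for pa, pb in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def fuse_max(a: Image, b: Image) -> Image:
    """Each output pixel keeps the larger intensity of the two sources."""
    _check_registered(a, b)
    return [[max(pa, pb) for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


if __name__ == "__main__":
    # Hypothetical 2x2 visible-light and thermal infrared images.
    visible = [[100.0, 120.0], [80.0, 60.0]]
    thermal = [[20.0, 200.0], [40.0, 180.0]]
    print(fuse_weighted_average(visible, thermal))  # [[60.0, 160.0], [60.0, 120.0]]
    print(fuse_max(visible, thermal))               # [[100.0, 200.0], [80.0, 180.0]]
```

Note that every pixel of both inputs is touched, which is why the cost grows with image size, and that the size check cannot detect misalignment: if the images are not registered, both rules silently blend unrelated scene points.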

Feature-level fusion is the intermediate level of image fusion. At this level, feature information such as edges, shapes, textures, and corners is first extracted from two or more source images and then combined. Feature-level fusion retains the important information in the images while compressing the data. This favors real-time processing and can directly improve the system's decision-making performance, because the extracted features provide a direct basis for analysis and decision-making. Current feature-level fusion methods fall mainly into five categories: probability and statistics methods, logical reasoning methods, neural network methods, feature extraction-based fusion methods, and search-based fusion methods.
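As an illustration of "extract features first, then combine", the sketch below extracts a binary edge map from each source image with a simple forward-difference gradient and fuses the maps with a logical OR. This particular extractor and rule are assumptions made for the example, not one of the five method categories named above; the function names, threshold, and sample images are hypothetical.

```python
from typing import List

Image = List[List[float]]   # grayscale intensities, row by row
EdgeMap = List[List[bool]]  # True where a pixel is judged to be an edge


def edge_map(img: Image, threshold: float) -> EdgeMap:
    """Feature extraction: mark pixels whose forward-difference gradient
    magnitude |dx| + |dy| exceeds threshold. At the right and bottom
    borders the difference that would run off the image is taken as 0."""
    h, w = len(img), len(img[0])
    edges: EdgeMap = []
    for y in range(h):
        row = []
        for x in range(w):
            dx = img[y][x + 1] - img[y][x] if x + 1 < w else 0.0
            dy = img[y + 1][x] - img[y][x] if y + 1 < h else 0.0
            row.append(abs(dx) + abs(dy) > threshold)
        edges.append(row)
    return edges


def fuse_edge_maps(maps: List[EdgeMap]) -> EdgeMap:
    """Feature-level fusion: a pixel is an edge if any source marks it."""
    h, w = len(maps[0]), len(maps[0][0])
    return [[any(m[y][x] for m in maps) for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    # Hypothetical sources: one shows a vertical edge, the other a horizontal one.
    visible = [[0.0, 100.0, 100.0]] * 3
    thermal = [[0.0, 0.0, 0.0], [100.0, 100.0, 100.0], [100.0, 100.0, 100.0]]
    fused = fuse_edge_maps([edge_map(visible, 50.0), edge_map(thermal, 50.0)])
    for row in fused:
        print("".join("#" if e else "." for e in row))
```

The fused map keeps both the vertical edge from one source and the horizontal edge from the other, while discarding raw intensities, which is the information compression the paragraph describes.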

Decision-level fusion is the highest level of image fusion. It combines the sub-decisions obtained from each source image according to specified rules to reach a globally optimal decision, which serves as the direct basis for system control. Decision-level fusion offers good real-time performance and fault tolerance, but its preprocessing cost is high and it loses a large amount of information.
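The combination step can be sketched with two common rules, majority voting and reliability-weighted voting, over per-image class labels. This assumes each source image has already been processed into its own sub-decision by some classifier, which is where the high preprocessing cost lies; the voting rules, function names, labels, and weights are illustrative assumptions rather than the rules any particular system uses.

```python
from collections import Counter
from typing import Dict, List


def majority_vote(decisions: List[str]) -> str:
    """Return the label chosen by the most sources; ties go to the
    label that appears first in `decisions`."""
    if not decisions:
        raise ValueError("need at least one sub-decision")
    counts = Counter(decisions)
    top = max(counts.values())
    return next(label for label in decisions if counts[label] == top)


def weighted_vote(decisions: List[str], weights: List[float]) -> str:
    """Sum each source's weight (e.g. its estimated reliability) per
    label and return the label with the highest total."""
    if not decisions or len(decisions) != len(weights):
        raise ValueError("need one weight per sub-decision")
    totals: Dict[str, float] = {}
    for label, w in zip(decisions, weights):
        totals[label] = totals.get(label, 0.0) + w
    return max(totals, key=lambda label: totals[label])


if __name__ == "__main__":
    # Hypothetical sub-decisions from three source images.
    subs = ["person", "vehicle", "person"]
    print(majority_vote(subs))                   # person
    print(weighted_vote(subs, [0.2, 0.9, 0.3]))  # vehicle (0.9 > 0.2 + 0.3)
```

Only labels are combined here, so the fused result cannot recover any pixel or feature detail the individual classifiers discarded. On the other hand, one faulty source can be outvoted, which reflects the fault tolerance described above.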