The statistical classification method works within the framework of pattern recognition theory. Its working steps can be summarized as "target feature extraction + classifier judgment": the target features describe the characteristics of a candidate target, and the classifier determines the type of the target from these features.

Target features include global features and local features. Global features take the entire human body as the object of description. Their advantage is a simple framework that is easy to implement. Their disadvantage is that they treat the human body as a whole and ignore its non-rigidity, so they are not flexible enough to cope with occlusion, varying poses, and changes in viewpoint. Typical global features include moment features, contour transform features, wavelet coefficient features, histogram of oriented gradient (HOG) features, Edgelet (micro-edge) features, and Shapelet (micro-shape) features.

(1) Moment features.

Moment features include Hu moments, Zernike moments, second-order moments, and so on. Under certain conditions, moment features are invariant to translation, rotation, and scale. However, the moment functions themselves make it difficult to combine the shape information carried by low-order and high-order moments. Moment features are therefore usually used only to describe simple human targets or certain parts of the human body. When describing complex human targets, they must be combined with other features.
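The following minimal NumPy sketch makes the invariance claim concrete. It is not taken from the cited work. It computes normalized central moments and the first two Hu invariants of a binary silhouette. The function names `central_moment`, `normalized_moment`, and `hu_first_two` are hypothetical helpers. Only the two lowest-order Hu invariants are shown, because they are built from second-order moments alone.

```python
import numpy as np

def central_moment(img, p, q):
    """Central moment mu_pq of a grayscale or binary image."""
    img = np.asarray(img, dtype=float)
    ys, xs = np.mgrid[:img.shape[0], :img.shape[1]]  # row (y) and column (x) indices
    m00 = img.sum()
    xc = (xs * img).sum() / m00                      # centroid removes translation
    yc = (ys * img).sum() / m00
    return ((xs - xc) ** p * (ys - yc) ** q * img).sum()

def normalized_moment(img, p, q):
    """Scale-normalized central moment eta_pq = mu_pq / mu_00^(1 + (p+q)/2)."""
    mu00 = central_moment(img, 0, 0)
    return central_moment(img, p, q) / mu00 ** (1 + (p + q) / 2)

def hu_first_two(img):
    """First two Hu invariants (rotation invariant combinations of eta_20, eta_02, eta_11)."""
    e20 = normalized_moment(img, 2, 0)
    e02 = normalized_moment(img, 0, 2)
    e11 = normalized_moment(img, 1, 1)
    phi1 = e20 + e02
    phi2 = (e20 - e02) ** 2 + 4 * e11 ** 2
    return np.array([phi1, phi2])
```

For a rectangular silhouette, shifting it or rotating it by 90° leaves both values unchanged. On a pixel grid, scale invariance holds only approximately.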

(2) Contour transformation features.

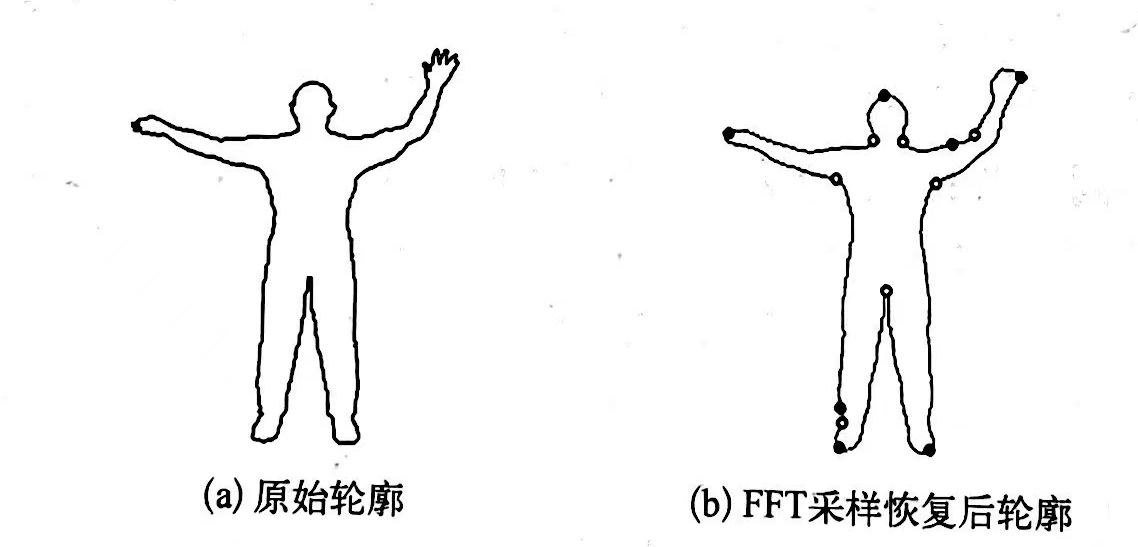

Contour transform features are obtained by first extracting the contour of the target and then applying a transformation to the set of contour points. Taking the figure above as an example, the original human contour is Fourier transformed, and a certain number of Fourier coefficients are sampled to describe the contour. When detecting or identifying a candidate target, the Euclidean distance between the sampled Fourier coefficients of the target contour and those of each template is computed. The posture of the closest template is taken as the posture of the target.
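Below is a minimal sketch of the Fourier-descriptor matching described above. It assumes each contour has already been extracted and resampled to the same number N of points. The normalization has three steps: drop the DC term, keep only magnitudes, and divide by the vector norm. This is one common way to obtain translation, rotation, and scale invariance. It is an assumption, not necessarily the procedure behind the figure. `fourier_descriptor` and `closest_template` are hypothetical names.

```python
import numpy as np

def fourier_descriptor(contour_xy, n_coeffs=16):
    """Closed contour of N points, shape (N, 2) -> n_coeffs normalized Fourier magnitudes.

    Assumes every contour has been resampled to the same N, with N > n_coeffs.
    """
    pts = np.asarray(contour_xy, dtype=float)
    z = pts[:, 0] + 1j * pts[:, 1]      # treat each point (x, y) as x + iy
    Z = np.fft.fft(z)
    half = n_coeffs // 2
    # Skip Z[0] (the centroid) for translation invariance and keep the
    # lowest positive and negative frequencies.
    kept = np.concatenate([Z[1:half + 1], Z[-half:]])
    mags = np.abs(kept)                 # magnitudes ignore rotation and starting point
    return mags / np.linalg.norm(mags)  # unit norm gives scale invariance

def closest_template(descriptor, templates):
    """Return (index, distance) of the template nearest in Euclidean distance."""
    dists = [float(np.linalg.norm(descriptor - t)) for t in templates]
    best = int(np.argmin(dists))
    return best, dists[best]
```

In use, each posture template would be stored as a descriptor, and a candidate contour is labeled with the posture returned by `closest_template`. For example, an ellipse that has been rotated, scaled, and shifted still matches an ellipse template (distance near zero) rather than a circle template.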

(3) Wavelet coefficient features.

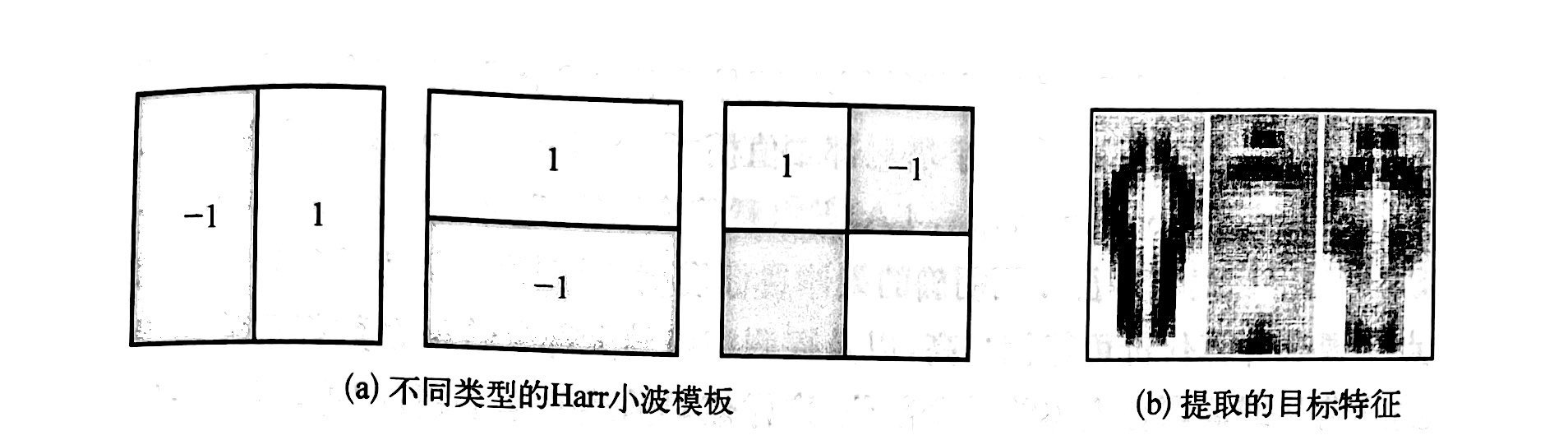

Haar-like features are typical wavelet coefficient features (also called rectangular features). Figure 44 shows several types of Haar-like features. A Haar-like feature value is the difference between the mean gray values of the white and black regions, and it is usually computed efficiently with the integral image method. Haar-like features represent regions with significant intensity changes well. Capturing grayscale changes in different directions and at different scales requires a huge number of Haar-like features of various types, so methods such as AdaBoost are often used to select the important ones.
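The integral image is what makes Haar-like features cheap. After one pass over the image, the sum over any rectangle needs only four array lookups. The sketch below assumes a grayscale NumPy array and shows a single two-rectangle feature, with the left half white and the right half black. The helper names and the choice of feature type are illustrative assumptions.

```python
import numpy as np

def integral_image(img):
    """ii[y, x] = sum of img[:y, :x]; an extra zero row/column avoids edge cases."""
    img = np.asarray(img, dtype=float)
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    ii[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return ii

def rect_sum(ii, y, x, h, w):
    """Sum of img[y:y+h, x:x+w] using four lookups in the integral image."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]

def haar_left_right(ii, y, x, h, w):
    """Mean of the left (white) half minus mean of the right (black) half; w must be even."""
    half = w // 2
    white = rect_sum(ii, y, x, h, half) / (h * half)
    black = rect_sum(ii, y, x + half, h, half) / (h * half)
    return white - black
```

Because every rectangle costs the same four lookups regardless of its size, features at all scales can be evaluated at the same constant cost.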

Gabor and Log-Gabor wavelets are also frequently used to build human target features because of their good texture analysis capability. Examples include the maximum orientation energy histogram feature based on Log-Gabor wavelets and the energy entropy feature based on the dual-tree complex wavelet transform (DTCWT).
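The sketch below is a rough illustration of an orientation energy histogram. It filters an image with complex Gabor kernels at four orientations, takes the orientation with maximum energy at each pixel, and histograms those winners. It substitutes an ordinary spatial-domain Gabor filter for the Log-Gabor wavelet, since Log-Gabor filters are usually defined in the frequency domain. The wavelength, sigma, and kernel size are arbitrary assumptions, so this is a simplified stand-in rather than the published feature.

```python
import numpy as np

def gabor_kernel(theta, wavelength=4.0, sigma=2.0, size=9):
    """Complex Gabor kernel whose wave travels along angle theta (radians)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    xr = x * np.cos(theta) + y * np.sin(theta)          # coordinate along the wave direction
    envelope = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    return envelope * np.exp(1j * 2 * np.pi * xr / wavelength)

def filter_same(img, kernel):
    """'Same'-size 2-D correlation with zero padding (slow but dependency-free)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)))
    out = np.zeros(img.shape, dtype=complex)
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out

def max_orientation_histogram(img, n_orient=4):
    """Normalized histogram of the orientation with maximum Gabor energy at each pixel."""
    img = np.asarray(img, dtype=float)
    thetas = np.arange(n_orient) * np.pi / n_orient
    energy = np.stack([np.abs(filter_same(img, gabor_kernel(t))) ** 2 for t in thetas])
    dominant = np.argmax(energy, axis=0)                # winning orientation per pixel
    hist = np.bincount(dominant.ravel(), minlength=n_orient).astype(float)
    return hist / hist.sum()
```

Using the magnitude of a complex (quadrature) filter response makes the energy independent of where a pixel lies within a stripe period. This is why texture orientation, rather than local phase, decides the histogram.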

(4) Histogram of oriented gradient (HOG) features.

The histogram of oriented gradient feature [106] divides the object region into several blocks and each block into several cells. For each cell, the gradients of all its pixels are accumulated into a histogram over orientations to obtain the cell's feature vector. The feature vectors of all cells in a block are then concatenated to form the block feature vector, and the feature vectors of all blocks are concatenated to form the feature vector of the sample. HOG features are very effective because they describe the edge structure of the human body well and are insensitive to illumination changes and small offsets.
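The cell, block, and concatenation steps can be sketched in NumPy as follows. This is a simplified version under several assumptions:

- cells of 8×8 pixels;
- blocks of 2×2 cells, overlapping with a stride of one cell;
- 9 unsigned orientation bins with hard assignment (no interpolation);
- L2 block normalization.

These parameter values are common defaults, not values taken from [106]. The function names are hypothetical.

```python
import numpy as np

def cell_histograms(img, cell=8, n_bins=9):
    """Per-cell histograms of unsigned gradient orientation, weighted by gradient magnitude."""
    img = np.asarray(img, dtype=float)
    gy, gx = np.gradient(img)
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180            # unsigned orientation in [0, 180)
    bins = np.minimum((ang / (180 / n_bins)).astype(int), n_bins - 1)
    n_cy, n_cx = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_cy, n_cx, n_bins))
    for cy in range(n_cy):
        for cx in range(n_cx):
            sl = (slice(cy * cell, (cy + 1) * cell), slice(cx * cell, (cx + 1) * cell))
            hist[cy, cx] = np.bincount(bins[sl].ravel(), weights=mag[sl].ravel(),
                                       minlength=n_bins)
    return hist

def hog_descriptor(img, cell=8, block=2, n_bins=9, eps=1e-6):
    """Concatenate L2-normalized block vectors; each block vector concatenates its cells."""
    hist = cell_histograms(img, cell, n_bins)
    feats = []
    for by in range(hist.shape[0] - block + 1):
        for bx in range(hist.shape[1] - block + 1):
            v = hist[by:by + block, bx:bx + block].ravel()
            feats.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(feats)
```

For a 64×128 detection window, these settings give 15 × 7 blocks of 36 values each, that is, a 3780-dimensional vector. Because every block is normalized, scaling the image contrast leaves the descriptor essentially unchanged. This is one source of the illumination insensitivity mentioned above.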

(5) Edgelet features.

Edgelet features [104] are dedicated to expressing human edges. Each Edgelet is a short edge segment that describes the outline of a certain part of the human body. A Boosting algorithm can be used to select the most effective set of Edgelets for describing the whole human body. When extracting Edgelet features, the Sobel operator can be used to compute edges. Edgelet features have several advantages. First, they are relatively insensitive to illumination. Second, they use both the intensity and the orientation of edges, so background edges whose shape resembles a given Edgelet can be rejected by their different edge orientation. Third, each Edgelet describes only a local region, which makes the description of human contours more flexible and reduces computation. Fourth, Edgelets are generated according to predefined rules and then selected automatically by a machine learning algorithm, which increases the degree of automation of the method.
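The following sketch shows how Sobel gradients can score an Edgelet using both edge strength and edge direction. As an assumption, the Edgelet is represented by a list of pixel positions with a unit normal at each point. The score averages |∇I · n| over the points, so a strong edge with the wrong orientation contributes little. The function names and this exact scoring formula are illustrative and not necessarily the definition used in [104].

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def sobel(img):
    """Sobel gradients (gx, gy) computed on the interior; border pixels stay zero."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            patch = img[dy:h - 2 + dy, dx:w - 2 + dx]
            gx[1:-1, 1:-1] += SOBEL_X[dy, dx] * patch
            gy[1:-1, 1:-1] += SOBEL_Y[dy, dx] * patch
    return gx, gy

def edgelet_affinity(gx, gy, points, normals, offset):
    """Mean of |gradient . normal| over the edgelet's (y, x) points shifted by offset.

    Hypothetical scoring: high only where edges are both strong and correctly oriented.
    """
    oy, ox = offset
    score = 0.0
    for (py, px), (ny, nx) in zip(points, normals):
        y, x = py + oy, px + ox
        score += abs(gx[y, x] * nx + gy[y, x] * ny)
    return score / len(points)
```

On a vertical step edge, a vertical Edgelet placed on the edge scores 4 (the Sobel response to a unit step). A horizontal Edgelet at the same position scores 0. This is how orientation lets background edges of a similar shape be rejected.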

(6) Shapelet features.

Edgelets are generated by predefined rules, and the most valuable ones are then selected automatically. Shapelets [107], by contrast, are themselves learned automatically with machine learning methods. First, edges are extracted from the training sample images in four directions: 0°, 45°, 90°, and 135°. Then Boosting is applied to the edge responses of the four edge maps within the same small window to obtain a Shapelet feature. Finally, the Shapelets obtained by training are used as features, and Boosting is applied again to train the final strong classifier. Compared with Edgelets, Shapelets can achieve a higher recognition rate.
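Training Shapelets requires a full Boosting pipeline, so the sketch below covers only the first step described above. It computes edge responses in the 0°, 45°, 90°, and 135° directions and pools them over small cells inside one window. The result is the set of low-level features that Boosting would then select and combine into a Shapelet. Two choices here are assumptions made for illustration: projecting the gradient onto each direction, and the 2×2 cell size. The function names are hypothetical.

```python
import numpy as np

def directional_edge_maps(img):
    """Absolute gradient responses in the 0, 45, 90 and 135 degree directions, shape (4, H, W)."""
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    maps = []
    for deg in (0, 45, 90, 135):
        t = np.deg2rad(deg)
        maps.append(np.abs(gx * np.cos(t) + gy * np.sin(t)))  # projection onto the direction
    return np.stack(maps)

def window_low_level_features(maps, y, x, size, cell=2):
    """Cell-summed responses of the four maps inside one small window.

    These would be the candidate inputs from which Boosting builds a Shapelet.
    """
    win = maps[:, y:y + size, x:x + size]
    n = size // cell
    cells = win[:, :n * cell, :n * cell].reshape(4, n, cell, n, cell).sum(axis=(2, 4))
    return cells.ravel()
```

Keeping the four directions as separate channels is what lets a learned Shapelet weight, for example, vertical edges on one side of a window and diagonal edges on the other.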

Unlike the global features above, local features are generally obtained by densely scanning the entire target region. Typical local features include the pyramid local binary pattern (LBP) [108], local averages (LA) [109], and sparse representation (SR) [110]. Local features do not require an explicit human target model and are highly robust to occlusion. Their main disadvantage is that the computational cost can be quite high. In addition, most of the feature coefficients of a detection window may describe the background or be confined to one part of the human body, which makes the description inefficient. A single target may also produce multiple detection results, so the results need to be refined after the scan is complete.
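As an example of a local feature, the sketch below computes basic 3×3 LBP codes. It then concatenates normalized 256-bin histograms over 1×1, 2×2, and 4×4 grids. Treating "pyramid" as this kind of spatial pyramid is an assumption. The variant in [108] may differ in neighborhood size, code mapping, or pyramid layout. The function names are hypothetical.

```python
import numpy as np

def lbp_codes(img):
    """Basic 3x3 LBP: one bit per neighbour that is >= the centre pixel (interior pixels only)."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    c = img[1:-1, 1:-1]
    # Neighbour offsets in clockwise order, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(c.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        nb = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (nb >= c).astype(np.int64) << bit
    return codes

def lbp_pyramid(img, levels=3):
    """Concatenate normalized 256-bin LBP histograms over 1x1, 2x2, 4x4, ... grids."""
    codes = lbp_codes(img)
    feats = []
    for level in range(levels):
        n = 2 ** level
        for rows in np.array_split(codes, n, axis=0):
            for cell in np.array_split(rows, n, axis=1):
                hist = np.bincount(cell.ravel(), minlength=256).astype(float)
                feats.append(hist / hist.sum())
    return np.concatenate(feats)
```

Each bit records only whether a neighbor is at least as bright as the center. Any increasing gray-level transformation, such as `3 * img + 7` on an integer image, therefore leaves the codes unchanged.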

The classifier in the statistical classification method takes the target features as input and judges the category of the candidate target from them. Classifiers such as Boosting Cascade, SVM, and ANN have been widely used in human target recognition.

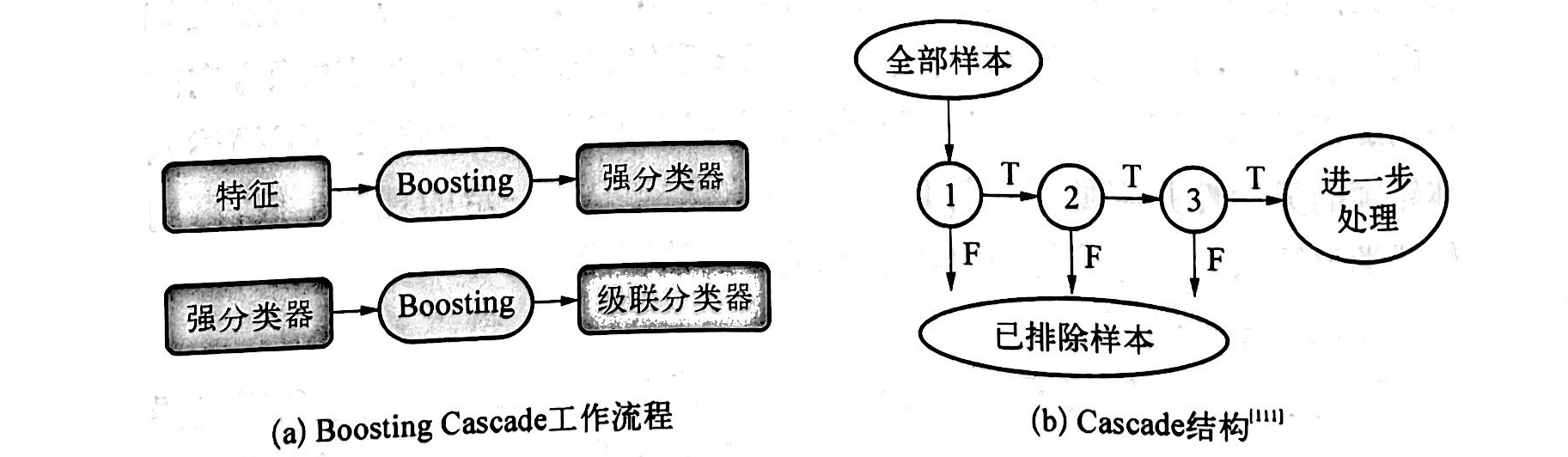

In Boosting Cascade, Boosting calls rules that have some value but are not always correct "weak classifiers". As long as each weak classifier performs better than random guessing, their combination can form a strong classifier with arbitrarily high accuracy and good generalization ability. Take AdaBoost, the most common Boosting algorithm, as an example. It can select a small number of key features (hundreds to thousands) from a huge set of rectangular features to build an effective strong classifier. Cascade is a chain structure formed by connecting a series of base classifiers whose discriminative power gradually increases. Once a base classifier at some stage rejects a sample, that sample is not processed further. This structure quickly rejects background regions and saves computation for regions that look more like human targets. Boosting Cascade combines Boosting and Cascade; its structure is shown in Figure 45. Because of these strengths, many improvements to the basic Boosting Cascade have been proposed, such as FloatBoost, BoostChain, Boosting with asymmetric loss, and Soft Cascade.
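Here is a compact sketch of the two ideas in this paragraph. It assumes the features are already computed into a matrix `X`, with labels `y` in {-1, +1}. Each weak classifier is a decision stump that thresholds a single feature, so every AdaBoost round effectively selects one feature. `cascade_predict` then evaluates a list of stages and rejects a sample at the first stage whose score falls below that stage's threshold. All names, the exhaustive stump search, and the stage format are hypothetical. Choosing the stage thresholds is not shown.

```python
import numpy as np

def train_stump(X, y, w):
    """Best single-feature threshold classifier under sample weights w."""
    best = (np.inf, 0, 0.0, 1)                      # (weighted error, feature, threshold, polarity)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for polarity in (1, -1):
                pred = np.where(polarity * (X[:, j] - thr) >= 0, 1, -1)
                err = w[pred != y].sum()
                if err < best[0]:
                    best = (err, j, thr, polarity)
    return best

def adaboost(X, y, n_rounds=10):
    """Discrete AdaBoost with decision stumps; returns a list of (alpha, feature, thr, polarity)."""
    w = np.full(len(y), 1.0 / len(y))
    stumps = []
    for _ in range(n_rounds):
        err, j, thr, pol = train_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)        # avoid division by zero / log(0)
        alpha = 0.5 * np.log((1 - err) / err)       # vote weight of this weak classifier
        pred = np.where(pol * (X[:, j] - thr) >= 0, 1, -1)
        w *= np.exp(-alpha * y * pred)              # increase weights of misclassified samples
        w /= w.sum()
        stumps.append((alpha, j, thr, pol))
    return stumps

def strong_score(stumps, x):
    """Weighted vote of all weak classifiers for one sample x."""
    return sum(a * (1 if p * (x[j] - t) >= 0 else -1) for a, j, t, p in stumps)

def cascade_predict(stages, x):
    """stages: list of (stumps, stage_threshold). Later stages run only if earlier ones pass."""
    for stumps, stage_thr in stages:
        if strong_score(stumps, x) < stage_thr:
            return -1                               # early rejection, e.g. a background window
    return 1
```

In a detector, early stages would hold only a few stumps with thresholds set to keep nearly all human windows. Most background windows then exit after very little computation.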

Compared with Boosting Cascade, the k-nearest neighbor (k-NN) classifier is a single classifier with a rather simple structure. It finds the k training samples closest to the test sample and assigns the test sample to the class that occurs most often among them. Because k-NN must compute the similarity between the test sample and every training sample, its computational cost is high when the training set is large. In addition, k-NN essentially gives every training sample equal weight, so its results are susceptible to abnormal (ill-conditioned) samples and strongly biased samples.
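The k-NN rule fits in a few lines. The sketch below uses a hypothetical `knn_predict` and assumes Euclidean distance. It makes the cost visible: every query computes a distance to every training sample.

```python
import numpy as np
from collections import Counter

def knn_predict(train_X, train_y, x, k=3):
    """Majority vote among the k training samples closest to x (Euclidean distance)."""
    dists = np.linalg.norm(train_X - x, axis=1)     # one distance per training sample: O(N)
    nearest = np.argsort(dists)[:k]
    votes = Counter(train_y[i] for i in nearest)    # every neighbour gets an equal vote
    return votes.most_common(1)[0][0]
```

The equal vote per neighbor is exactly why a few mislabeled samples near a query can flip its label.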

SVM overcomes these problems of the k-NN classifier. Based on the principle of structural risk minimization, it selects the training samples near the decision boundary that best describe the categories; these are called "support vectors". Only the support vectors take part in deciding the category of a test sample, so SVM can achieve better classification performance than k-NN. However, the computational cost of SVM classification still depends on the size of the training set, because the number of support vectors generally grows with it. Moreover, the traditional SVM outputs only a binary decision and cannot give the degree of membership of the classification result. Finally, the SVM kernel function must satisfy the Mercer condition, which is sometimes difficult to meet.
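Two points from this paragraph can be checked numerically. The sketch below evaluates the SVM decision function f(x) = Σ αᵢyᵢK(sᵢ, x) + b using only the support vectors sᵢ. It also tests a necessary condition for the Mercer condition on a finite sample: the Gram matrix must be positive semidefinite. The toy coefficients and kernel parameters are illustrative assumptions, not a trained model, and the function names are hypothetical.

```python
import numpy as np

def linear_kernel(a, b):
    return float(np.dot(a, b))

def rbf_kernel(a, b, gamma=0.5):
    """Gaussian (RBF) kernel exp(-gamma * ||a - b||^2)."""
    return float(np.exp(-gamma * np.sum((np.asarray(a) - np.asarray(b)) ** 2)))

def svm_decision(x, support_vectors, alpha_y, b, kernel):
    """f(x) = sum_i alpha_i * y_i * K(s_i, x) + b, summed over support vectors only."""
    return sum(c * kernel(s, x) for s, c in zip(support_vectors, alpha_y)) + b

def gram_is_psd(X, kernel, tol=1e-10):
    """Necessary (not sufficient) Mercer check: the Gram matrix on X must be PSD."""
    K = np.array([[kernel(a, b) for b in X] for a in X])
    return bool(np.all(np.linalg.eigvalsh((K + K.T) / 2) >= -tol))
```

Consider a toy 1-D case with support vectors −1 and +1, coefficients αᵢyᵢ = −0.5 and 0.5, and a linear kernel; the decision function reduces to f(x) = x. A sigmoid kernel tanh(a·b − 1) fails the check on the two points 0 and 1, whereas the RBF kernel passes on a small sample. Passing on one sample does not prove that a kernel satisfies the Mercer condition.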

ANN also achieves good results in human body recognition, but its parameters are often difficult to determine. In recent years, deep neural networks (DNN), the latest development of ANN, have gradually been applied to human body recognition. DNN replaces the manual feature extraction of traditional algorithms with automatic feature extraction and integrates this step with the classification process. DNN can help design more informative feature sets while being computationally more efficient than traditional models. The core of a DNN lies in its network topology, especially the number of hidden layers, which strongly affects both the classification performance and the running time of the algorithm. Researchers have proposed DNN models such as CNN, SPP-Net, Faster R-CNN, Siamese convolutional networks, and DR-Net for human feature extraction, pedestrian recognition, human tracking, and pose estimation, with some success.